
How to Use a Cloudflare Worker to Instantly Detect New Pages

Tired of manually checking for new pages? Check out this handy Cloudflare Worker!

By AJ Dichmann, VP of Digital Strategy at Globe Runner


Ever had a client quietly publish a blog post or service page… and you don’t find out until your monthly report? Yeah, us too—until we built a simple Cloudflare Worker to instantly detect new pages and notify our team in real time.

Here’s how it works (and how you can steal the idea), or skip right ahead to the code.

Why Detecting New Pages Matters for SEO

Search engines don’t always crawl new content right away, especially if the page has no internal links or backlinks. That delay can cost your client rankings, conversions, and visibility.

By catching new URLs right as they go live, we can:

- Make sure the content is optimized and meets brand standards

- Submit the page to Google Search Console immediately

- Promote it on social or via ads that same day

- Start building internal links and tracking performance

- Let the content team know it’s live so they can update Asana

How Our Worker Script Works

I wrote a Cloudflare Worker that checks client XML sitemaps on a schedule (hourly, daily—your call). It pulls the list of <loc> URLs, compares them to the last cached version, then sends a notification when anything new is detected.
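The comparison step is a set difference: anything in the current `<loc>` list that wasn't in the cached list counts as new. A minimal sketch of that step on its own, using a `Set` so lookups stay fast on large sitemaps (`findNewUrls` is a hypothetical name used only for illustration):

```javascript
// Return URLs present in currentUrls but absent from previousUrls,
// keeping sitemap order and skipping duplicates.
function findNewUrls(previousUrls, currentUrls) {
  const seen = new Set(previousUrls);
  const fresh = [];
  for (const url of currentUrls) {
    if (!seen.has(url)) {
      fresh.push(url);
      seen.add(url); // don't report the same URL twice
    }
  }
  return fresh;
}

const before = ["https://example.com/", "https://example.com/about"];
const after = [
  "https://example.com/",
  "https://example.com/about",
  "https://example.com/blog/new-post",
];
// Logs an array containing only https://example.com/blog/new-post
console.log(findNewUrls(before, after));
```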

Right now I get alerts in Discord, but we’re also syncing notifications to Asana so we can drop new pages directly into the content or SEO queue.
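The Asana sync isn't part of the code shared in this post. As a rough sketch, assuming Asana's REST API (`POST https://app.asana.com/api/1.0/tasks`) and a personal access token, each new URL could become a task in a project. `ASANA_TOKEN` and `ASANA_PROJECT_GID` are hypothetical Worker bindings, not part of the original setup:

```javascript
// Hypothetical: build an Asana task payload for one new URL.
// projectGid would come from an assumed ASANA_PROJECT_GID binding.
function buildAsanaTask(url, projectGid) {
  return {
    data: {
      name: `New page detected: ${url}`,
      notes: `Found in the sitemap at ${new Date().toISOString()}.\n${url}`,
      projects: [projectGid],
    },
  };
}

// Hypothetical: create one task per URL using an assumed ASANA_TOKEN secret.
async function createAsanaTasks(urls, env) {
  for (const url of urls) {
    const res = await fetch("https://app.asana.com/api/1.0/tasks", {
      method: "POST",
      headers: {
        Authorization: `Bearer ${env.ASANA_TOKEN}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(buildAsanaTask(url, env.ASANA_PROJECT_GID)),
    });
    if (!res.ok) {
      console.error(`Asana task creation failed for ${url}: HTTP ${res.status}`);
    }
  }
}
```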

Common Sitemap File Names to Watch

If your client is using WordPress with popular SEO plugins, their sitemaps probably live at one of these paths:

- /sitemap_index.xml (Rank Math and Yoast)

- /sitemap.xml (Shopify and others)

- /page-sitemap.xml, /post-sitemap.xml, /category-sitemap.xml (various plugins)
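If you're not sure which of these paths a client uses, you can probe them. A minimal sketch, assuming the server answers `HEAD` requests (some don't, and some return a 200 HTML page for missing paths, so treat hits as candidates to double-check). `candidateSitemapUrls` and `findSitemaps` are hypothetical helpers, not part of the Worker script:

```javascript
// The common paths listed above (not an exhaustive list).
const COMMON_SITEMAP_PATHS = [
  "/sitemap_index.xml",
  "/sitemap.xml",
  "/page-sitemap.xml",
  "/post-sitemap.xml",
  "/category-sitemap.xml",
];

// Build absolute candidate URLs from any URL on the site.
function candidateSitemapUrls(siteUrl) {
  const origin = new URL(siteUrl).origin;
  return COMMON_SITEMAP_PATHS.map((path) => origin + path);
}

// Return the candidates that answer a HEAD request with a 2xx status.
async function findSitemaps(siteUrl) {
  const found = [];
  for (const candidate of candidateSitemapUrls(siteUrl)) {
    try {
      const res = await fetch(candidate, { method: "HEAD", redirect: "follow" });
      if (res.ok) found.push(candidate);
    } catch (err) {
      console.error(`Could not reach ${candidate}: ${err}`);
    }
  }
  return found;
}
```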

We built in support to scan multiple sitemaps if needed, or you can just feed it the main index file and let it handle the rest (including child sitemaps).
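As posted, `parseUrlsFromSitemap` collects every `<loc>` in whatever file it's given, and in an index file those `<loc>` entries point to child sitemaps rather than pages. A minimal sketch of expanding an index first, assuming index files use the standard `<sitemapindex>` root element. `collectPageUrls` and `extractLocs` are hypothetical helpers; the fetch function is passed in so the logic can be tested without the network:

```javascript
// Extract the text of every <loc> element, ignoring surrounding whitespace.
function extractLocs(xmlText) {
  return [...xmlText.matchAll(/<loc>\s*([\s\S]*?)\s*<\/loc>/g)].map((m) => m[1]);
}

// Follow a sitemap index down to page URLs.
// maxDepth stops runaway recursion if indexes point at each other.
async function collectPageUrls(sitemapUrl, fetchText, maxDepth = 2) {
  const xmlText = await fetchText(sitemapUrl);
  const locs = extractLocs(xmlText);

  // A <urlset> lists pages; a <sitemapindex> lists child sitemaps.
  if (!/<sitemapindex[\s>]/.test(xmlText)) return locs;
  if (maxDepth <= 0) return [];

  const pages = [];
  for (const child of locs) {
    pages.push(...(await collectPageUrls(child, fetchText, maxDepth - 1)));
  }
  return pages;
}

// Inside the Worker, fetchText could be:
// const fetchText = async (u) => (await fetch(u)).text();
```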

Why This Beats Paid SEO Tools (and It’s Free)

Tools like Ahrefs, Screaming Frog, and Sitebulb are awesome — but none of them notify you when a client silently publishes new content unless you’re constantly crawling their site.

This Cloudflare Worker runs at the edge on Cloudflare’s free Workers plan (within its daily request and KV limits). No server. No subscriptions. Just fast, private alerts when new content drops.

Want This Set Up for Your Agency or Clients?

I’ve rolled this out for all of our retainer SEO clients, and it’s been a game-changer. No more “surprise pages” and no more lag on content promotion or technical QA.

Need help installing or customizing it for your agency? Let’s chat. We’ll hook you up.

Cloudflare Worker Code and KV Setup

To set up the Worker and KV storage to monitor an XML sitemap for changes, follow these steps:

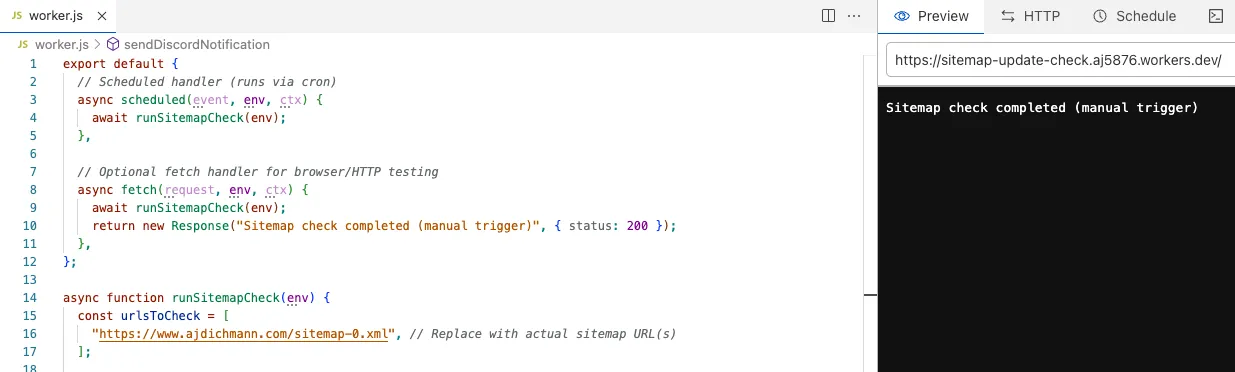

- Add the JavaScript below as a Cloudflare Worker and update the urlsToCheck array with your XML sitemap URLs.

```javascript
export default {
  // Scheduled handler (runs via the Cron Trigger)
  async scheduled(event, env, ctx) {
    await runSitemapCheck(env);
  },

  // Optional fetch handler for browser/HTTP testing.
  // Anyone who can reach the Worker's URL can trigger a check.
  async fetch(request, env, ctx) {
    await runSitemapCheck(env);
    return new Response("Sitemap check completed (manual trigger)", { status: 200 });
  },
};

async function runSitemapCheck(env) {
  const urlsToCheck = [
    "https://www.ajdichmann.com/sitemap-0.xml", // Replace with actual sitemap URL(s)
  ];

  const allNewEntries = [];

  for (const url of urlsToCheck) {
    const response = await fetch(url);
    if (!response.ok) {
      console.error(`Failed to fetch sitemap: ${url} (HTTP ${response.status})`);
      continue;
    }

    const xmlText = await response.text();
    const currentUrls = parseUrlsFromSitemap(xmlText);

    // Key by the full sitemap URL so several sitemaps on the same host
    // don't overwrite each other's cached lists.
    const cacheKey = `sitemap:${url}`;
    const cached = await env.SITEMAP_KV.get(cacheKey); // null if the key doesn't exist

    if (cached === null) {
      // First run for this sitemap: save a baseline instead of
      // reporting every existing URL as "new".
      await env.SITEMAP_KV.put(cacheKey, JSON.stringify(currentUrls));
      continue;
    }

    const previousUrls = new Set(JSON.parse(cached));
    const newEntries = currentUrls.filter((u) => !previousUrls.has(u));

    if (newEntries.length > 0) {
      allNewEntries.push(...newEntries);
      // Only write when something changed, to stay well under KV write limits
      await env.SITEMAP_KV.put(cacheKey, JSON.stringify(currentUrls));
    }
  }

  if (allNewEntries.length > 0) {
    await sendDiscordNotification(allNewEntries, env.DISCORD_WEBHOOK_URL);
    console.log(`✅ New entries sent to Discord: ${allNewEntries.length}`);
  } else {
    console.log("🔍 No new sitemap entries found.");
  }
}

function parseUrlsFromSitemap(xmlText) {
  const urls = [];
  // Capture the text inside each <loc> element, ignoring surrounding whitespace
  const regex = /<loc>\s*([\s\S]*?)\s*<\/loc>/g;
  let match;
  while ((match = regex.exec(xmlText)) !== null) {
    urls.push(match[1]);
  }
  return urls;
}

async function sendDiscordNotification(urls, webhookUrl) {
  // Discord caps message content at 2,000 characters; leave room for the header
  const MAX_MSG_LENGTH = 1900;
  const msgContent = urls.join("\n").slice(0, MAX_MSG_LENGTH);

  const body = {
    username: "Sitemap Watchdog 🐾",
    content: `🔔 **New URLs Detected:**\n${msgContent}`,
  };

  const res = await fetch(webhookUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  if (!res.ok) {
    console.error(`Discord webhook failed: HTTP ${res.status}`);
  }
}
```
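`sendDiscordNotification` truncates the list at 1,900 characters, so a big batch (a site launch or migration, say) can silently drop URLs, sometimes cutting one in half. A minimal sketch of splitting the list across several messages instead, each under Discord's 2,000-character content limit. `chunkUrls` and `sendDiscordNotifications` are hypothetical replacements, and a very large batch could still run into Discord's webhook rate limits:

```javascript
// Split URLs into message bodies that each stay within `limit` characters,
// never splitting a URL across messages (a single oversized URL is truncated).
function chunkUrls(urls, limit = 1900) {
  const chunks = [];
  let current = "";
  for (const rawUrl of urls) {
    const url = rawUrl.slice(0, limit);
    const candidate = current ? `${current}\n${url}` : url;
    if (candidate.length > limit) {
      chunks.push(current); // current is non-empty here
      current = url;
    } else {
      current = candidate;
    }
  }
  if (current) chunks.push(current);
  return chunks;
}

// Send one webhook message per chunk.
async function sendDiscordNotifications(urls, webhookUrl) {
  for (const chunk of chunkUrls(urls)) {
    const res = await fetch(webhookUrl, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({
        username: "Sitemap Watchdog 🐾",
        content: `🔔 **New URLs Detected:**\n${chunk}`,
      }),
    });
    if (!res.ok) console.error(`Discord webhook failed: HTTP ${res.status}`);
  }
}
```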

- Attach a KV namespace with the binding name SITEMAP_KV.

Binding a KV namespace to the Worker:

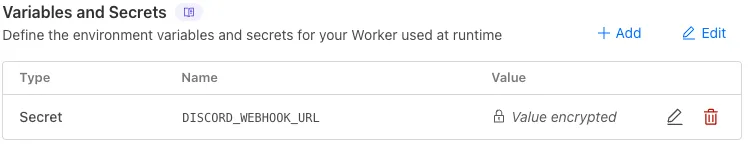

- Add an environment variable (ideally stored as a secret) named DISCORD_WEBHOOK_URL containing your Discord webhook URL.

Adding the Discord webhook URL as a secret:

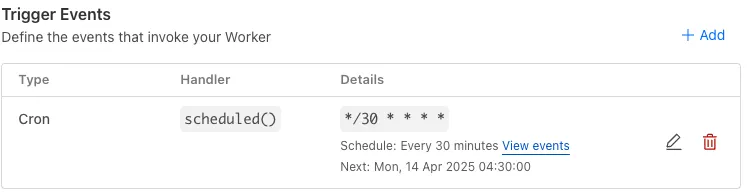
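If either binding name doesn't match exactly, the Worker fails with a fairly cryptic `TypeError` about `undefined`. A small guard you could call at the top of `runSitemapCheck(env)` to get a clearer message; `assertBindings` is a hypothetical addition, not part of the original script:

```javascript
// Throw a readable error if the bindings from the setup steps are missing.
function assertBindings(env) {
  const missing = [];
  if (!env.SITEMAP_KV || typeof env.SITEMAP_KV.get !== "function") {
    missing.push("SITEMAP_KV (KV namespace)");
  }
  if (!env.DISCORD_WEBHOOK_URL) {
    missing.push("DISCORD_WEBHOOK_URL (secret)");
  }
  if (missing.length > 0) {
    throw new Error(`Missing Worker bindings: ${missing.join(", ")}`);
  }
}
```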

- Add a Cron Trigger that runs the script every 30 minutes with this expression:

```
*/30 * * * *
```

Cron Trigger settings:

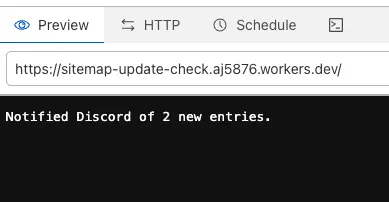

If the script runs successfully and finds new pages, you should see a message like this in Discord:

TL;DR:

This tiny tool saves us time, prevents SEO blind spots, and keeps our workflows tight. And the best part? It’s free.
